Automation That Moves Code

An agent for every step in the software development life-cycle.

Small Specialized Models Is All You Need

Trusted by Engineers

Why /ML?

Don't waste time fighting configs.

We have a Docker image and a UI for everything.

Serve Large Language Models

All you have to do is make sure it's available on Hugging Face.
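
One way to confirm that a model is available before pointing /ML at it is to query the Hugging Face Hub directly. The sketch below is illustrative and not part of /ML: the helper names `hub_model_url` and `is_on_hub` are hypothetical, and it assumes the Hub's public `api/models/<repo_id>` endpoint answers 200 for a public repository. Private or gated models may answer differently without a token.

```python
import urllib.error
import urllib.parse
import urllib.request

# Assumption: public Hub REST endpoint for model metadata.
HUB_API = "https://huggingface.co/api/models/"


def hub_model_url(repo_id: str) -> str:
    """Build the metadata URL for a repo id such as 'org/model'."""
    return HUB_API + urllib.parse.quote(repo_id, safe="/")


def is_on_hub(repo_id: str, opener=urllib.request.urlopen, timeout: float = 10.0) -> bool:
    """Return True if the Hub answers 200 for this repo id, False on an HTTP error.

    `opener` is injectable so the check can be exercised without network access.
    """
    try:
        with opener(hub_model_url(repo_id), timeout=timeout) as resp:
            return resp.status == 200
    except urllib.error.HTTPError:
        # 401/404 and similar: treat the model as not publicly available.
        return False
```

Calling `is_on_hub("org/model")` with a real repository id performs a single HTTP request; network failures other than HTTP errors are left to propagate so they are not mistaken for a missing model.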

    # Serve Large Language Models
    def serve_llm(model_name, input_text):
        # Initialize the model
        model = load_model(model_name)
        # Generate response
        response = model.generate(input_text)
        # Log for observability
        log_inference(model_name, input_text, response)
        return response

    def train_multimodal(model_name, dataset, epochs=10):
        model = load_multimodal_model(model_name)
        model.train(dataset, epochs=epochs)
        log_training_metrics(model_name, model.metrics)
        model.save()
        return model

Serve and Train Multi-modal Models

On /ML Workspaces or your own workspace: in other words, our GPUs or yours.

Host your Streamlit, Gradio and Dash Apps

Share your dashboards and apps with users

    # Host Streamlit, Gradio and Dash Apps
    def deploy_app(app_type, app_path):
        if app_type == "streamlit":
            cmd = f"streamlit run {app_path} --server.enableCORS=false"
        elif app_type == "gradio":
            cmd = f"gradio {app_path}"
        elif app_type == "dash":
            cmd = f"python {app_path}"
        else:
            # Fail early rather than reach start_process with no command
            raise ValueError(f"Unsupported app type: {app_type}")
        # Deploy with observability
        process = start_process(cmd)
        setup_monitoring(process.pid, app_type)
        return f"App deployed at http://your_domain:{process.port}"
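
The `start_process` helper and `process.port` in the snippet above are not defined on this page, so how the port is chosen is left open. A minimal sketch of one possibility is to pick the port up front and pass it to each framework explicitly. The function name `build_launch` is hypothetical. It relies on Streamlit's `--server.port` flag and Gradio's `GRADIO_SERVER_PORT` environment variable, and it assumes the Dash script reads a `PORT` environment variable itself.

```python
import os
import sys


def build_launch(app_type: str, app_path: str, port: int):
    """Return (argv, env) for launching an app on a fixed port.

    Nothing is started here; the caller hands the result to a process runner.
    """
    env = dict(os.environ)
    if app_type == "streamlit":
        argv = ["streamlit", "run", app_path,
                "--server.port", str(port),
                "--server.enableCORS=false"]
    elif app_type == "gradio":
        argv = ["gradio", app_path]
        env["GRADIO_SERVER_PORT"] = str(port)
    elif app_type == "dash":
        argv = [sys.executable, app_path]
        # Assumption: the Dash script picks its port from PORT.
        env["PORT"] = str(port)
    else:
        raise ValueError(f"Unsupported app type: {app_type}")
    return argv, env
```

The pair can be passed to `subprocess.Popen(argv, env=env)`. Building an argument list instead of an f-string command avoids shell quoting problems when `app_path` contains spaces.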

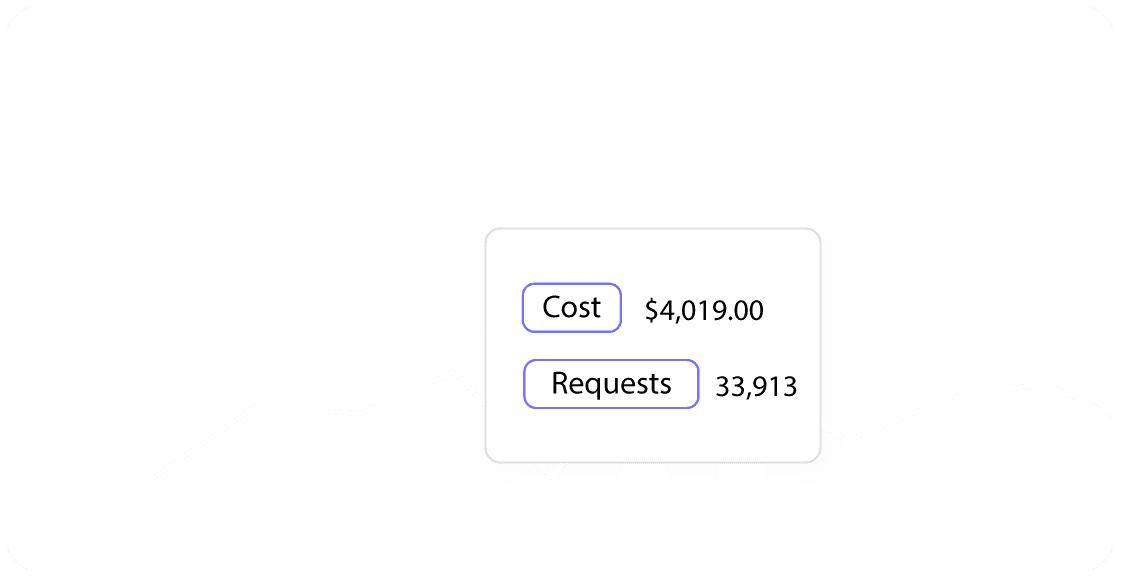

Cost observability

Increase cloud cost visibility and gain clear insights into cloud spending